DALL·E: Creating Images from Text

DALL·E[1] is a 12-billion parameter version of GPT-3 trained to generate images from text descriptions, using a dataset of text–image pairs. We’ve found that it has a diverse set of capabilities, including creating anthropomorphized versions of animals and objects, combining unrelated concepts in plausible ways, rendering text, and applying transformations to existing images.

GPT-3 showed that language can be used to instruct a large neural network to perform a variety of text generation tasks. Image GPT showed that the same type of neural network can also be used to generate images with high fidelity. We extend these findings to show that manipulating visual concepts through language is now within reach.

Overview

Like GPT-3, DALL·E is a transformer language model. It receives both the text and the image as a single stream of data containing up to 1280 tokens, and is trained using maximum likelihood to generate all of the tokens, one after another.[2] This training procedure allows DALL·E to not only generate an image from scratch, but also to regenerate any rectangular region of an existing image that extends to the bottom-right corner, in a way that is consistent with the text prompt.
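The region-completion idea above can be illustrated with a minimal sketch. The post does not describe the actual procedure, so everything here is an assumption for illustration: the image tokens are taken to form a square grid in raster order, and `sample_next` is a hypothetical stand-in for the model's next-token sampler. Cells inside the rectangle are resampled one after another; all other cells keep their original tokens.

```python
GRID = 32  # assumed grid side; the post only states the total token count


def region_mask(top, left, grid=GRID):
    """True for cells in the rectangle from (top, left) to the bottom-right corner."""
    return [[r >= top and c >= left for c in range(grid)] for r in range(grid)]


def complete_region(image_tokens, top, left, sample_next, grid=GRID):
    """Resample the masked cells in raster order, keeping every other cell fixed.

    image_tokens: flat list of grid * grid token ids in raster order.
    sample_next:  hypothetical callable(prefix) -> token id, standing in for a
                  model conditioned on the text and the image tokens so far.
    """
    mask = region_mask(top, left, grid)
    out = []
    for i, tok in enumerate(image_tokens):
        r, c = divmod(i, grid)
        # Inside the region: draw a new token given everything emitted so far.
        # Outside the region: copy the existing token unchanged.
        out.append(sample_next(out) if mask[r][c] else tok)
    return out


if __name__ == "__main__":
    # Toy run on a 4 x 4 grid with a dummy sampler that always returns 7.
    result = complete_region([0] * 16, top=2, left=1,
                             sample_next=lambda prefix: 7, grid=4)
    for row in range(4):
        print(result[row * 4:(row + 1) * 4])
```

Because tokens are produced in order, each resampled cell can only depend on cells that precede it in the stream, which is why the sketch passes just the prefix to the sampler.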

We recognize that work involving generative models has the potential for significant, broad societal impacts. In the future, we plan to analyze how models like DALL·E relate to societal issues like economic impact on certain work processes and professions, the potential for bias in the model outputs, and the longer term ethical challenges implied by this technology.

Capabilities

We find that DALL·E is able to create plausible images for a great variety of sentences that explore the compositional structure of language. We illustrate this using a series of interactive visuals in the next section. The samples shown for each caption in the visuals are obtained by taking the top 32 of 512 after reranking with CLIP, but we do not use any manual cherry-picking, aside from the thumbnails and standalone images that appear outside.[3]

Controlling attributes

We test DALL·E’s ability to modify several of an object’s attributes, as well as the number of times that it appears.


For several of the visuals in this post, we find that repeating the caption, sometimes with alternative phrasings, improves the consistency of the results.


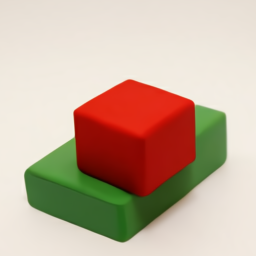

Drawing multiple objects

Simultaneously controlling multiple objects, their attributes, and their spatial relationships presents a new challenge. For example, consider the phrase “a hedgehog wearing a red hat, yellow gloves, blue shirt, and green pants.” To correctly interpret this sentence, DALL·E must not only correctly compose each piece of apparel with the animal, but also form the associations (hat, red), (gloves, yellow), (shirt, blue), and (pants, green) without mixing them up.[4] We test DALL·E’s ability to do this for relative positioning, stacking objects, and controlling multiple attributes.


While DALL·E does offer some level of controllability over the attributes and positions of a small number of objects, the success rate can depend on how the caption is phrased. As more objects are introduced, DALL·E is prone to confusing the associations between the objects and their colors, and the success rate decreases sharply. We also note that DALL·E is brittle with respect to rephrasing of the caption in these scenarios: alternative, semantically equivalent captions often yield no correct interpretations.

Visualizing perspective and three-dimensionality

We find that DALL·E also allows for control over the viewpoint of a scene and the 3D style in which a scene is rendered.


To push this further, we test DALL·E’s ability to repeatedly draw the head of a well-known figure at each angle from a sequence of equally spaced angles, and find that we can recover a smooth animation of the rotating head.


DALL·E appears to be able to apply some types of optical distortions to scenes, as we see with the options “fisheye lens view” and “a spherical panorama.” This motivated us to explore its ability to generate reflections.


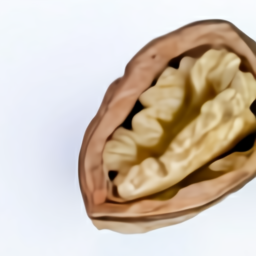

Visualizing internal and external structure

The samples from the “extreme close-up view” and “x-ray” style led us to further explore DALL·E’s ability to render internal structure with cross-sectional views, and external structure with macro photographs.


Inferring contextual details

The task of translating text to images is underspecified: a single caption generally corresponds to an infinitude of plausible images, so the image is not uniquely determined. For instance, consider the caption “a painting of a capybara sitting on a field at sunrise.” Depending on the orientation of the capybara, it may be necessary to draw a shadow, though this detail is never mentioned explicitly. We explore DALL·E’s ability to resolve underspecification in three cases: changing style, setting, and time; drawing the same object in a variety of different situations; and generating an image of an object with specific text written on it.


Generally, the longer the string that DALL·E is prompted to write, the lower the success rate. We find that the success rate improves when parts of the caption are repeated. Additionally, the success rate sometimes improves as the sampling temperature for the image is decreased, although the samples become simpler and less realistic.
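For readers unfamiliar with sampling temperature, the following is a minimal sketch of the standard technique: logits are divided by the temperature before the softmax, so lower temperatures concentrate probability on the most likely tokens. The function names are illustrative, and the post does not say how DALL·E's sampler is implemented.

```python
import math
import random


def token_probs(logits, temperature=1.0):
    """Return softmax(logits / temperature) as a list of probabilities."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def sample_with_temperature(logits, temperature=1.0, rng=random):
    """Draw one token index from the temperature-scaled distribution."""
    probs = token_probs(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.0]
    for t in (1.0, 0.5, 0.1):
        print(t, [round(p, 3) for p in token_probs(logits, t)])
```

As the temperature drops, the distribution sharpens toward the highest-scoring token, which is consistent with the observation that samples become simpler.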

With varying degrees of reliability, DALL·E provides access to a subset of the capabilities of a 3D rendering engine via natural language. It can independently control the attributes of a small number of objects, and to a limited extent, how many there are, and how they are arranged with respect to one another. It can also control the location and angle from which a scene is rendered, and can generate known objects in compliance with precise specifications of angle and lighting conditions.

Unlike a 3D rendering engine, whose inputs must be specified unambiguously and in complete detail, DALL·E is often able to “fill in the blanks” when the caption implies that the image must contain a certain detail that is not explicitly stated.

Applications of preceding capabilities

Next, we explore the use of the preceding capabilities for fashion and interior design.


DALL·E also seems to occasionally confuse less common colors with other neighboring shades. For example, when prompted to draw clothes in “navy,” DALL·E sometimes uses lighter shades of blue, or shades very close to black. Similarly, DALL·E sometimes confuses “olive” with shades of brown or brighter shades of green.


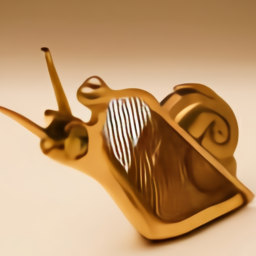

Combining unrelated concepts

The compositional nature of language allows us to put together concepts to describe both real and imaginary things. We find that DALL·E also has the ability to combine disparate ideas to synthesize objects, some of which are unlikely to exist in the real world. We explore this ability in two instances: transferring qualities from various concepts to animals, and designing products by taking inspiration from unrelated concepts.


In a previous section, we saw that as more objects are introduced into the scene, DALL·E is liable to confuse the associations between the objects and their specified attributes. Here, we see a different sort of failure mode: sometimes, rather than binding some attribute of the specified concept (say, “a faucet”) to the animal (say, “a snail”), DALL·E just draws the two as separate items.


When generating some of these objects, such as “an armchair in the shape of an avocado”, DALL·E appears to relate the shape of a half avocado to the back of the chair, and the pit of the avocado to the cushion. We find that DALL·E is susceptible to the same kinds of mistakes mentioned in the previous visual.

Animal illustrations

In the previous section, we explored DALL·E’s ability to combine unrelated concepts when generating images of real-world objects. Here, we explore this ability in the context of art, for three kinds of illustrations: anthropomorphized versions of animals and objects, animal chimeras, and emojis.


We find it interesting how DALL·E adapts human body parts onto animals. For example, when asked to draw a daikon radish blowing its nose, sipping a latte, or riding a unicycle, DALL·E often draws the kerchief, hands, and feet in plausible locations.


We also find that inserting the phrase “professional high quality” before “illustration” and “emoji” sometimes improves the quality and consistency of the results.


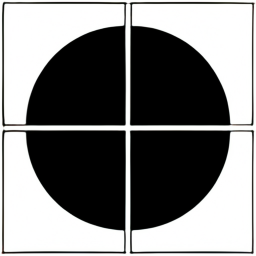

Zero-shot visual reasoning

GPT-3 can be instructed to perform many kinds of tasks solely from a description and a cue to generate the answer supplied in its prompt, without any additional training. For example, when prompted with the phrase “here is the sentence ‘a person walking his dog in the park’ translated into French:”, GPT-3 answers “un homme qui promène son chien dans le parc.” This capability is called zero-shot reasoning. We find that DALL·E extends this capability to the visual domain, and is able to perform several kinds of image-to-image translation tasks when prompted in the right way.


Other transformations, such as “animal with sunglasses” and “animal wearing a bow tie,” require placing the accessory on the correct part of the animal’s body. Those that only change the color of the animal, such as “animal colored pink,” are less reliable, but show that DALL·E is sometimes capable of segmenting the animal from the background. Finally, the transformations “a sketch of the animal” and “a cell phone case with the animal” explore the use of this capability for illustrations and product design.


We did not anticipate that this capability would emerge, and made no modifications to the neural network or training procedure to encourage it. Motivated by these results, we measure DALL·E’s aptitude for analogical reasoning problems by testing it on Raven’s progressive matrices, a visual IQ test that saw widespread use in the 20th century.


DALL·E is often able to solve matrices that involve continuing simple patterns or basic geometric reasoning, such as those in sets B and C. It is sometimes able to solve matrices that involve recognizing permutations and applying boolean operations, such as those in set D. The instances in set E tend to be the most difficult, and DALL·E gets almost none of them correct.

For each of the sets, we measure DALL·E’s performance on both the original images, and the images with the colors inverted. The inversion of colors should pose no additional difficulty for a human, yet does generally impair DALL·E’s performance, suggesting its capabilities may be brittle in unexpected ways.
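The color-inversion control is simple to reproduce. The sketch below assumes 8-bit grayscale pixels stored as nested lists, which is an illustrative choice; the post does not specify the image format used in the evaluation.

```python
def invert_colors(image, max_value=255):
    """Return a copy of the image with every pixel value flipped.

    image: list of rows, each a list of ints in [0, max_value]
           (an assumed 8-bit grayscale layout).
    """
    return [[max_value - pixel for pixel in row] for row in image]


if __name__ == "__main__":
    puzzle = [[0, 255], [10, 200]]
    print(invert_colors(puzzle))
```

Inversion is its own inverse, so applying it twice recovers the original image and no information is lost, which is why it should not make the puzzle harder in principle.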

Geographic knowledge

We find that DALL·E has learned about geographic facts, landmarks, and neighborhoods. Its knowledge of these concepts is surprisingly precise in some ways and flawed in others.


Temporal knowledge

In addition to exploring DALL·E’s knowledge of concepts that vary over space, we also explore its knowledge of concepts that vary over time.


Summary of approach and prior work

DALL·E is a simple decoder-only transformer that receives both the text and the image as a single stream of 1280 tokens—256 for the text and 1024 for the image—and models all of them autoregressively. The attention mask at each of its 64 self-attention layers allows each image token to attend to all text tokens. DALL·E uses the standard causal mask for the text tokens, and sparse attention for the image tokens with either a row, column, or convolutional attention pattern, depending on the layer. We plan to provide more details about the architecture and training procedure in an upcoming paper.
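The attention rules described above can be made concrete with a small sketch. It is a simplification under stated assumptions, not the actual architecture: the 1024 image tokens are assumed to form a 32 × 32 raster grid, the "row" and "column" patterns are reduced to "same row" and "same column", and the convolutional pattern is omitted because the post gives no details about it.

```python
N_TEXT, N_IMAGE = 256, 1024  # token counts stated in the post
GRID = 32                    # assumption: 32 * 32 = 1024 image tokens


def can_attend(i, j, pattern="row"):
    """Return True if stream position i may attend to position j (0-based)."""
    if not (0 <= i < N_TEXT + N_IMAGE and 0 <= j < N_TEXT + N_IMAGE):
        raise IndexError("position outside the 1280-token stream")
    if j > i:
        return False  # autoregressive: no position looks ahead
    if j < N_TEXT:
        return True   # causal over text; image tokens see every text token
    # Here both i and j are image positions (N_TEXT <= j <= i).
    row_i, col_i = divmod(i - N_TEXT, GRID)
    row_j, col_j = divmod(j - N_TEXT, GRID)
    if pattern == "row":
        return row_i == row_j      # simplified row pattern (assumption)
    if pattern == "column":
        return col_i == col_j      # simplified column pattern (assumption)
    raise ValueError("unknown pattern: " + pattern)


if __name__ == "__main__":
    first_image = N_TEXT
    print(can_attend(first_image + 40, 0))               # image -> text
    print(can_attend(first_image + 33, first_image + 1)) # different rows
```

Expressing the mask as a predicate rather than a full 1280 × 1280 matrix keeps the sketch cheap to run while still showing which pairs of positions are connected.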

Text-to-image synthesis has been an active area of research since the pioneering work of Reed et al., whose approach uses a GAN conditioned on text embeddings. The embeddings are produced by an encoder pretrained using a contrastive loss, not unlike CLIP. StackGAN and StackGAN++ use multi-scale GANs to scale up the image resolution and improve visual fidelity. AttnGAN incorporates attention between the text and image features, and proposes a contrastive text-image feature matching loss as an auxiliary objective. This is interesting to compare to our reranking with CLIP, which is done offline. Other work incorporates additional sources of supervision during training to improve image quality. Finally, work by Nguyen et al. and Cho et al. explores sampling-based strategies for image generation that leverage pretrained multimodal discriminative models.

Similar to the rejection sampling used in VQVAE-2, we use CLIP to rerank the 512 samples generated for each caption and keep the top 32 in all of the interactive visuals. This procedure can also be seen as a kind of language-guided search, and can have a dramatic impact on sample quality.
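The reranking step could be sketched as follows: generate many candidates, score each one against the caption, and keep the best few. Here `score_fn` is a hypothetical placeholder for a caption-image similarity score such as the one CLIP provides; no real CLIP API is used, and the defaults simply mirror the 32-of-512 figures from the post.

```python
def rerank_samples(caption, samples, score_fn, keep=32):
    """Return the `keep` samples that score highest for the caption, best first.

    score_fn: hypothetical callable(caption, sample) -> float, where a higher
              value means the sample matches the caption better.
    """
    ranked = sorted(samples, key=lambda s: score_fn(caption, s), reverse=True)
    return ranked[:keep]


if __name__ == "__main__":
    # Dummy data: integers stand in for images, and the toy score prefers
    # values close to 100.
    candidates = list(range(512))
    best = rerank_samples("a toy caption", candidates,
                          score_fn=lambda cap, s: -abs(s - 100))
    print(len(best), best[:3])
```

Since scoring happens after generation, this kind of selection needs no change to the generator itself, which matches the post's description of the reranking as an offline step.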



Author: OpenAI

https://openai.com/blog/dall-e/