We’ve released new versions of GPT-3 and Codex which can edit or insert content into existing text, rather than just completing existing text. These new capabilities make it practical to use the OpenAI API to revise existing content, such as rewriting a paragraph of text or refactoring code. This unlocks new use cases and improves existing ones; for example, insertion is already being piloted in GitHub Copilot with promising early results.


def___

fib(10)def fib(n):

if n

fib(10)=>def fib(n):

if n

fib(10)=>def fib(n):

if n

fib(10)=>def fib(n):

if n

fib(10)=>def fib(n):

if n

fib(10)=>def fib(n):

if n

fib(10)=>def fib(n):

if n

fib(10)=>def fib(n):

if n

fib(10)=>def fib(n, memo={}):

if n in memo:

return memo[n]

if n =>def fib(n, memo={}):

if n in memo:

return memo[n]

if n =>def fib(n, memo={}):

if n in memo:

return memo[n]

if n =>def fib(n, memo={}):

if n in memo:

return memo[n]

if n =>def fib(n, memo={}):

if n in memo:

return memo[n]

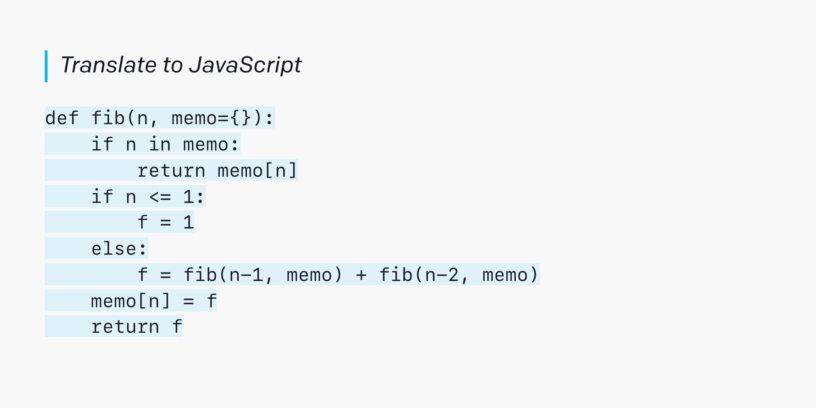

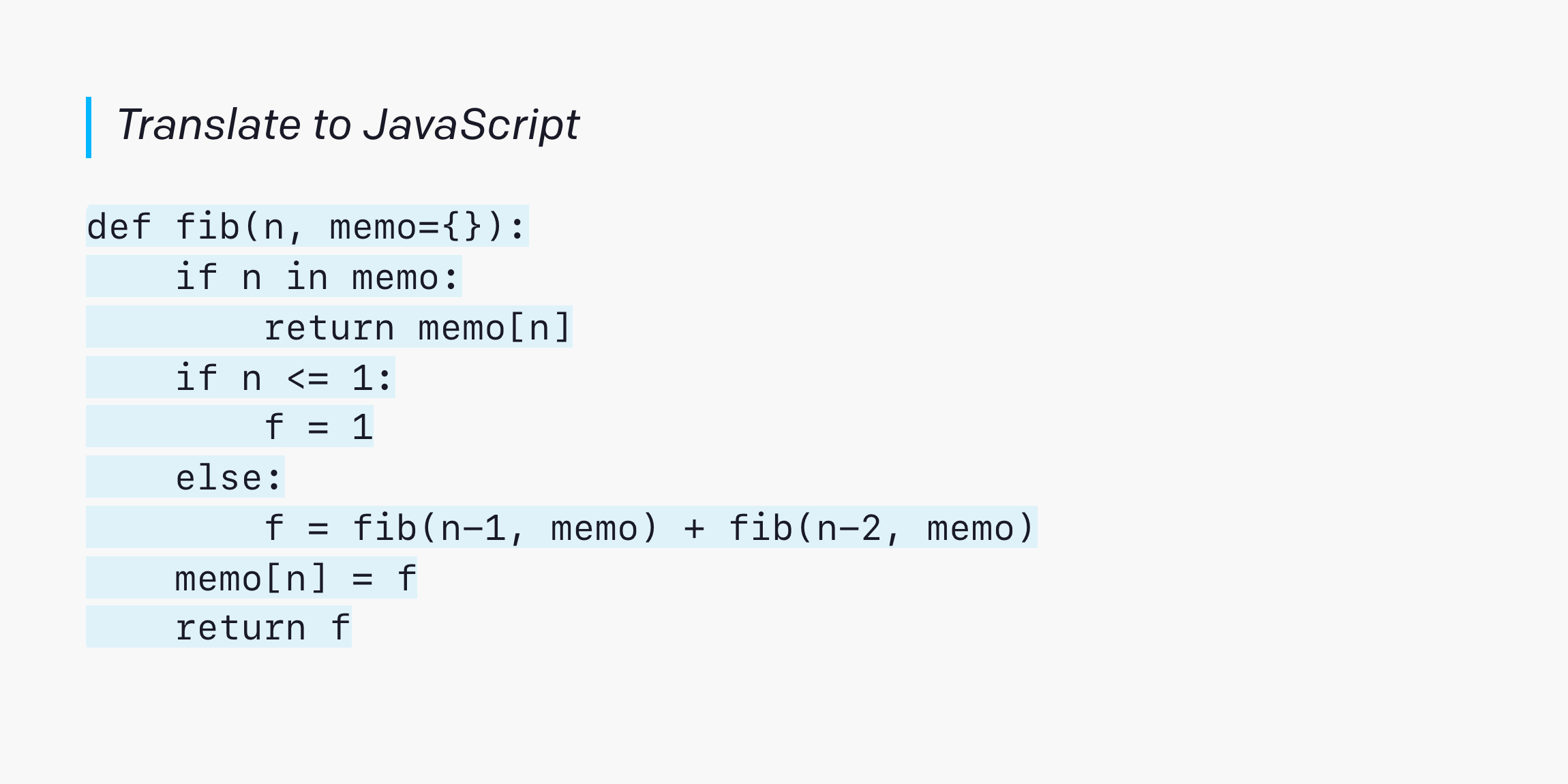

if n =>function fibonacci(n) {

var memo = {};

return (function fib(n, memo) {

if (n in memo) return memo[n];

var f;

if (n =>function fibonacci(n) {

var memo = {};

return (function fib(n, memo) {

if (n in memo) return memo[n];

var f;

if (n =>function fibonacci(n) {

var memo = {};

return (function fib(n, memo) {

if (n in memo) return memo[n];

var f;

if (n =>function fibonacci(n) {

var memo = {};

return (function fib(n, memo) {

if (n in memo) return memo[n];

var f;

if (n =>function fibonacci(n) {

var memo = {};

return (function fib(n, memo) {

if (n in memo) return memo[n];

var f;

if (n =>function fibonacci(n) {

var memo = {};

return (function fib(n, memo) {

if (n in memo) return memo[n];

var f;

if (n =>function fibonacci(n) {

var memo = {};

return (function fib(n, memo) {

if (n in memo) return memo[n];

var f;

if (n =>function fibonacci(n) {

var memo = {};

return (function fib(n, memo) {

if (n in memo) return memo[n];

var f;

if (n =>function fibonacci(n) {

var memo = {};

return (function fib(n, memo) {

return n in memo ? memo[n] : (memo[n] = n =>

function fibonacci(n) {

var memo = {};

return (function fib(n, memo) {

return n in memo ? memo[n] : (memo[n] = n =>/

function fibonacci(n) {

var memo = {};

return (function fib(n, memo) {

return n in memo ? memo[n] : (memo[n] = n =>/**

function fibonacci(n) {

var memo = {};

return (function fib(n, memo) {

return n in memo ? memo[n] : (memo[n] = n =>/**___

function fibonacci(n) {

var memo = {};

return (function fib(n, memo) {

return n in memo ? memo[n] : (memo[n] = n =>/**

* Recursive Fibonacci function with memoization.

* @param {number} n

* @returns {number}

*/

function fibonacci(n) {

var memo = {};

return (function fib(n, memo) {

return n in memo ? memo[n] : (memo[n] = n =>

GPT-3 and Codex have traditionally added text to the end of existing content, based on the text that came before. Whether working with text or code, writing is more than just appending—it’s an iterative process where existing text is revised. GPT-3 and Codex can now edit text, changing what’s currently there or adding text to the middle of content.

Inserting into the middle of text

The new insert capability adds contextually relevant text in the middle of existing content. Providing future context to the model can improve the quality of completions for applications such as writing long-form text, transitioning between paragraphs, following an outline, or guiding the model toward an ending.

With nothing after the insertion point:

Today’s the big day! Congratulations to all our high school graduates!

We hope you enjoy your graduation ceremonies and wish you the best of luck in your future endeavors.

With a following section header:

Today’s the big day! I’m graduating from high school. I’m so excited. I’m going to move to San Francisco and start a new life. I can’t wait to get there.

Section 2. Moving to San Francisco

With a different following header:

Today’s the big day! I’m graduating from high school. I’m so excited to be moving on to the next chapter of my life. I’m going to Istanbul!

Section 2. Moving to Istanbul

And with a third:

Today’s the big day! My high school graduation! I’m so excited! I can’t wait to leave the city and move to the farm. I want to live a simple, quiet life. I want to get up in the morning, milk the cows, feed the horses, then sit and watch the sunrise. I want to spend the rest of my days traveling the world, reading and writing. I’m so tired of this city life.

Section 2. Moving to the farm

In the example above, the goal is to fill in text between two section headers of an outline. Without the context of future sections, the model generates a completion that isn’t relevant to the second section. When the context of future sections is accounted for, the model generates a completion that ties the two sections together.

Starting code, with ___ marking the insertion point:

    def get_files(path: str, size: int):
        def___
        for (dirp, _, files) in os.walk(path):
            yield from prune(dirp, files)

After inserting the missing function, the import, and the docstring:

    import os

    def get_files(path: str, size: int):
        """Yields files in the path tree of min size"""
        def prune(dirp, files):
            for file in files:
                file = os.path.join(dirp, file)
                if os.path.getsize(file) > size:
                    yield file
        for (dirp, _, files) in os.walk(path):
            yield from prune(dirp, files)

Insert is particularly useful for writing code. In fact, Codex was our original motivation for developing this capability, since in software development we typically add code to the middle of an existing file, where code is present both before and after the completion. In the example above, the model successfully completes the missing function prune while connecting to code already written. We also add a docstring and the missing import, which is not possible without knowing the code that comes after. In GitHub Copilot, Insert is currently being piloted with promising early results.

The insert capability is available in the API today in beta, as part of the completions endpoint and via a new interface in Playground. The capability can be used with the latest versions of GPT-3 and Codex, text-davinci-002 and code-davinci-002. Pricing is the same as previous versions of Davinci.
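A minimal sketch of how an insert request might be assembled, assuming the completions endpoint accepts the text after the insertion point in a `suffix` field alongside `prompt`. The `[insert]` marker and the helpers `split_at_marker` and `build_insert_request` are illustrative names, not part of the API, and the sketch only builds the request body rather than sending it.

```python
from typing import Dict, Tuple, Union

INSERT_MARKER = "[insert]"  # hypothetical marker for the insertion point


def split_at_marker(text: str, marker: str = INSERT_MARKER) -> Tuple[str, str]:
    """Split text into (prefix, suffix) around exactly one insertion marker."""
    if text.count(marker) != 1:
        raise ValueError("expected exactly one insertion marker")
    prefix, suffix = text.split(marker)
    return prefix, suffix


def build_insert_request(
    text: str, model: str = "text-davinci-002", max_tokens: int = 64
) -> Dict[str, Union[str, int]]:
    """Build a request body; the `suffix` field name is an assumption."""
    prefix, suffix = split_at_marker(text)
    return {
        "model": model,
        "prompt": prefix,   # text before the insertion point
        "suffix": suffix,   # text after the insertion point (future context)
        "max_tokens": max_tokens,
    }


outline = "Today's the big day![insert]\n\nSection 2. Moving to San Francisco"
request = build_insert_request(outline)
print(request["prompt"])
print(repr(request["suffix"]))
```

Keeping the split separate from the request builder makes it easy to check what the model will see on each side of the gap before any call is made.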

Editing existing text

A meaningful part of the work of writing text and code is editing existing content. We’ve released a new endpoint in beta, called edits, that changes existing text according to an instruction instead of completing it.

After the first edit (add a poem):

That’s pretty good at writing replies

When it’s asked a question

It gives its suggestion

This is a poem it made that rhymes

After the second edit (first person):

I am pretty good at writing replies

When I am asked a question

I give my suggestion

This is a poem it made that rhymes

After the third edit (letter):

I am very nice AI

I am pretty good at writing replies

When I am asked a question

I give my suggestion

This is a poem I made that rhymes

Kind regards,

GPT-3

Editing works by specifying existing text as a prompt and an instruction on how to modify it. The edits endpoint can be used to change the tone or structure of text, or to make targeted changes like fixing spelling. We’ve also observed that edits work well on empty prompts, enabling text generation similar to the completions endpoint. In the example above, we use edits to (1) add a poem, (2) change the poem to be in first person, and (3) transform the poem into a letter with the appropriate salutation and signature.
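The chain of edits described above can be sketched as follows. This assumes the edits endpoint takes the existing text in an `input` field and the directive in an `instruction` field. `build_edit_request`, `apply_edits`, the `send` callable, and the instruction strings are all hypothetical, and a stand-in `fake_send` replaces the real network call so the example runs offline.

```python
from typing import Callable, Dict, List

EditRequest = Dict[str, str]


def build_edit_request(
    text: str, instruction: str, model: str = "text-davinci-edit-001"
) -> EditRequest:
    """Build a request body; the field names are assumptions."""
    return {"model": model, "input": text, "instruction": instruction}


def apply_edits(
    text: str, instructions: List[str], send: Callable[[EditRequest], str]
) -> str:
    """Apply instructions in order, feeding each result into the next edit."""
    for instruction in instructions:
        text = send(build_edit_request(text, instruction))
    return text


def fake_send(request: EditRequest) -> str:
    # Stand-in for the API call: appends the instruction so the chain is visible.
    return request["input"] + "[" + request["instruction"] + "]"


steps = [
    "Add a short poem about GPT-3",          # hypothetical instruction
    "Rewrite the poem in the first person",  # hypothetical instruction
    "Turn the poem into a letter",           # hypothetical instruction
]
result = apply_edits("", steps, fake_send)  # start from an empty prompt
print(result)
```

Passing `send` in as a parameter keeps the chaining logic independent of any particular client library.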

The three US cities with the worst traffic are:

1. Boston, MA (164 hours)

2. Washington, DC (155 hours)

3. Chicago, IL (138 hours)

As JSON:

    [
      {"rank": 1, "city": "Boston", "state": "MA", "hours": 164},
      {"rank": 2, "city": "Washington DC", "state": "DC", "hours": 155},
      {"rank": 3, "city": "Chicago", "state": "IL", "hours": 138}
    ]

After the first edit:

    [
      {"city": "Boston", "state": "Massachusetts", "hours": 164},
      {"city": "Washington DC", "state": "District of Columbia", "hours": 155},
      {"city": "Chicago", "state": "Illinois", "hours": 138}
    ]

After the second edit:

    def get_yaml():
        return """
        - city: Boston
          state: Massachusetts
          hours: 164
        - city: Washington DC
          state: District of Columbia
          hours: 155
        - city: Chicago
          state: Illinois
          hours: 138
        """

The edits endpoint is particularly useful for writing code. It works well for tasks like refactoring, adding documentation, translating between programming languages, and changing coding style. The example above starts with JSON input containing cities ranked by hours of traffic. With our first edit, Codex removes the rank field from the JSON and changes the state abbreviations into full names. The second edit converts the JSON into YAML returned from a function.
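For comparison, here is a hand-written version of the two transformations in the example, which can serve as a reference when checking a model’s output. It is a sketch, not how Codex performs the edit: the `STATE_NAMES` table, the function names, and the hand-rolled YAML formatting are assumptions made for illustration, and the formatter handles only flat lists of simple records like these.

```python
import json
from typing import Any, Dict, List

# Lookup table covering only the states in the example (an assumption).
STATE_NAMES = {"MA": "Massachusetts", "DC": "District of Columbia", "IL": "Illinois"}

RANKED_JSON = """[
  {"rank": 1, "city": "Boston", "state": "MA", "hours": 164},
  {"rank": 2, "city": "Washington DC", "state": "DC", "hours": 155},
  {"rank": 3, "city": "Chicago", "state": "IL", "hours": 138}
]"""

Record = Dict[str, Any]


def drop_rank_and_expand_states(records: List[Record]) -> List[Record]:
    """First edit: remove the rank field and spell out state names."""
    cleaned = []
    for record in records:
        item = {key: value for key, value in record.items() if key != "rank"}
        item["state"] = STATE_NAMES.get(item["state"], item["state"])
        cleaned.append(item)
    return cleaned


def to_yaml(records: List[Record]) -> str:
    """Second edit: render flat records as a YAML block sequence."""
    lines = []
    for record in records:
        for index, (key, value) in enumerate(record.items()):
            lead = "- " if index == 0 else "  "  # first key opens the list item
            lines.append(f"{lead}{key}: {value}")
    return "\n".join(lines) + "\n"


cities = drop_rank_and_expand_states(json.loads(RANKED_JSON))
print(to_yaml(cities))
```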

Editing is available as a specialized endpoint in the API and through a new interface in Playground. It is supported by models text-davinci-edit-001 and code-davinci-edit-001. The edits endpoint is currently free to use and publicly available as a beta.


Author: Mohammad Bavarian

https://openai.com/blog/gpt-3-edit-insert/