When machine learning (ML) is deployed in production, monitoring the model is important for maintaining the quality of predictions. Although the statistical properties of the training data are known in advance, real-life data can gradually deviate over time and impact the prediction results of your model, a phenomenon known as data drift. Detecting these conditions in production can be challenging and time-consuming, and requires a system that captures incoming real-time data, performs statistical analyses, defines rules to detect drift, and sends alerts for rule violations. Furthermore, the process must be repeated for every new iteration of the model.

Amazon SageMaker Model Monitor enables you to continuously monitor ML models in production. You can set alerts to detect deviations in the model quality and take corrective actions, such as retraining models, auditing upstream systems, or fixing data quality issues. You can use insights from Model Monitor to proactively determine model prediction variance due to data drift and then use Amazon Augmented AI (Amazon A2I), a fully managed feature in Amazon SageMaker, to send ML inferences to human workflows for review. You can use Amazon A2I for multiple purposes, such as:

- Reviewing results below a threshold

- Human oversight and audit use cases

- Augmenting AI and ML results as required

In this post, we show how to set up an ML workflow on Amazon SageMaker to train an XGBoost algorithm for breast cancer predictions. We deploy the model on a real-time inference endpoint, launch a model monitoring schedule, evaluate monitoring results, and trigger a human review loop for below-threshold predictions. We then show how the human loop workers review and update the predictions.

We walk you through the following steps using this accompanying Jupyter notebook:

- Preprocess your input dataset.

- Train an XGBoost model and deploy to a real-time endpoint.

- Generate baselines and start Model Monitor.

- Review the model monitor reports and derive insights.

- Set up a human review loop for low-confidence detection using Amazon A2I.

Prerequisites

Before getting started, you need to create your human workforce and set up your Amazon SageMaker Studio notebook.

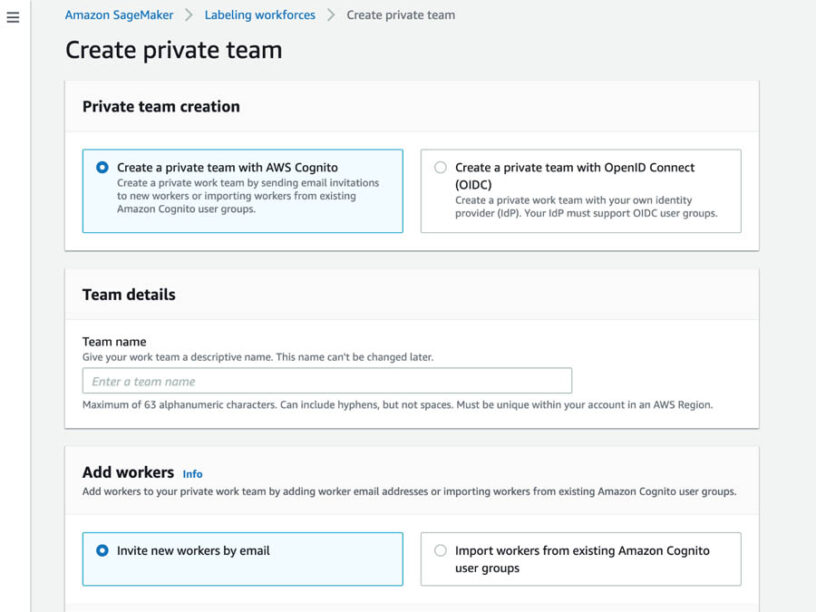

Creating your human workforce

For this post, you create a private work team and add only one user (you) to it. For instructions, see Create an Amazon Cognito Workforce Using the Labeling Workforces Page.

Enter your email in the email addresses box for workers. To invite your colleagues to participate in reviewing tasks, include their email addresses in this box.

After you create your private team, you receive an email from no-reply@verificationemail.com that contains your workforce username, password, and a link that you can use to log in to the worker portal. Enter the username and password you received in the email to log in. You must then create a new, non-default password. This is your private worker’s interface.

When you create an Amazon A2I human review task using your private team (explained in the Starting a human loop section), your task should appear in the Jobs section. See the following screenshot.

After you create your private workforce, you can view it on the Labeling workforces page, on the Private tab.

Setting up your Amazon SageMaker Studio notebook

To set up your notebook, complete the following steps:

- Onboard to Amazon SageMaker Studio with the quick start procedure.

- When you create an AWS Identity and Access Management (IAM) role for the notebook instance, be sure to specify access to Amazon Simple Storage Service (Amazon S3). You can choose Any S3 Bucket or specify the S3 bucket you want to enable access to. You can use the AWS-managed policies AmazonSageMakerFullAccess and AmazonAugmentedAIFullAccess to grant general access to these two services.

- When the user is created and active, choose Open Studio.

- On the Studio landing page, from the File drop-down menu, choose New.

- Choose Terminal.

- In the terminal, enter the following code:

- Open the notebook by choosing Amazon-A2I-with-Amazon-SageMaker-Model-Monitor.ipynb in the amazon-a2i-sample-jupyter-notebooks folder.

Preprocessing your input dataset

You can follow the steps in this post using the accompanying Jupyter notebook. Make sure you provide an S3 bucket and a prefix of your choice. We then import the Python data science libraries and the Amazon SageMaker Python SDK that we need to run through our use case.

Loading the dataset

For this post, we use a dataset for breast cancer predictions from the UCI Machine Learning Repository. Please refer to the accompanying Jupyter notebook for the code to load and split this dataset. Based on the input features, we first train a model to detect a benign (label=0) or malignant (label=1) condition.

The following screenshot shows some of the rows in the training dataset.

Training and deploying an Amazon SageMaker XGBoost model

XGBoost (eXtreme Gradient Boosting) is a popular and efficient open-source implementation of the gradient boosted trees algorithm. For our use case, we use the binary:logistic objective. The model applies logistic regression for binary classification (in this example, whether a condition is benign or malignant). The output is a probability between 0 and 1 for the positive class (malignant); training minimizes the logistic loss, which is the negative log likelihood of a Bernoulli distribution.

With Amazon SageMaker, you can use XGBoost as a built-in algorithm or framework. For this use case, we use the built-in algorithm. To specify the Amazon Elastic Container Registry (Amazon ECR) container location for the Amazon SageMaker implementation of XGBoost, enter the following code:

Creating the XGBoost estimator

We use the XGBoost container to construct an estimator using the Amazon SageMaker Estimator API and initiate a training job (the full walkthrough is available in the accompanying Jupyter notebook):

Specifying hyperparameters and starting training

We can now specify the hyperparameters for our training. You set hyperparameters to facilitate the estimation of model parameters from data. See the following code:
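As a minimal sketch, assuming an estimator that was already constructed for the built-in XGBoost container, the hyperparameters can be kept in a dictionary and applied with `set_hyperparameters` before calling `fit`. Only the `binary:logistic` objective comes from this post; every other value, and the helper name `configure_and_train`, is an illustrative placeholder rather than the notebook's tuned setting.

```python
# Illustrative values only; the objective is the one named in this post.
XGB_HYPERPARAMETERS = {
    "objective": "binary:logistic",  # predict a probability for the positive class
    "num_round": 100,                # number of boosting rounds
    "max_depth": 5,                  # maximum depth of each tree
    "eta": 0.2,                      # learning rate (step size shrinkage)
    "gamma": 4,                      # minimum loss reduction required to split
    "min_child_weight": 6,           # minimum sum of instance weight in a child
    "subsample": 0.8,                # fraction of rows sampled per tree
}


def configure_and_train(estimator, train_input, validation_input, params=None):
    """Apply hyperparameters to a SageMaker Estimator and start a training job.

    `estimator` is assumed to be a sagemaker.estimator.Estimator. The inputs
    can be S3 URIs or TrainingInput objects; for CSV data a TrainingInput with
    content_type="text/csv" is the usual choice.
    """
    params = dict(XGB_HYPERPARAMETERS if params is None else params)
    estimator.set_hyperparameters(**params)
    estimator.fit({"train": train_input, "validation": validation_input})
    return params
```

Keeping the values in one dictionary makes it easy to log exactly what a training job used and to override single entries between iterations of the model.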

For more information, see XGBoost Parameters.

Deploying the XGBoost model

We deploy a model that’s hosted behind a real-time inference endpoint. As a prerequisite for Model Monitor, we pass a data_capture_config when deploying the endpoint, which enables Amazon SageMaker to collect the inference requests and responses for use in Model Monitor. For more information, see the accompanying notebook.

The deploy function returns a Predictor object that you can use for inference:

Invoking the deployed model using the endpoint

You can now send data to this endpoint to get inferences in real time. The request and response payload, along with some additional metadata, is saved in the Amazon S3 location that you specified in DataCaptureConfig. You can follow the steps in the walkthrough notebook.

The following JSON code is an example of an inference request and response captured:
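The sketch below uses a made-up record in the general shape that data capture writes (one JSON object per line, with the request under `endpointInput` and the response under `endpointOutput`) and shows one way to pull the features and the predicted probability out of it. The feature values, event ID, and timestamp are placeholders, and the helper `parse_capture_line` is hypothetical. It assumes CSV-encoded payloads; a base64-encoded capture would need decoding first.

```python
import json

# Illustrative capture record; the values are invented for this example.
SAMPLE_CAPTURE_LINE = json.dumps({
    "captureData": {
        "endpointInput": {
            "observedContentType": "text/csv",
            "mode": "INPUT",
            "data": "17.99,10.38,122.8,1001.0",
            "encoding": "CSV",
        },
        "endpointOutput": {
            "observedContentType": "text/csv; charset=utf-8",
            "mode": "OUTPUT",
            "data": "0.9734",
            "encoding": "CSV",
        },
    },
    "eventMetadata": {
        "eventId": "example-event-id",
        "inferenceTime": "2020-01-01T00:00:00Z",
    },
    "eventVersion": "0",
})


def parse_capture_line(line):
    """Return (features, probability) from one CSV-encoded capture record."""
    capture = json.loads(line)["captureData"]
    features = [float(v) for v in capture["endpointInput"]["data"].split(",")]
    probability = float(capture["endpointOutput"]["data"])
    return features, probability


features, probability = parse_capture_line(SAMPLE_CAPTURE_LINE)
print(len(features), probability)
```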

Starting Amazon SageMaker Model Monitor

Amazon SageMaker Model Monitor continuously monitors the quality of ML models in production. To start using Model Monitor, we create a baseline, inspect the baseline job results, and create a monitoring schedule.

Creating a baseline

The baseline calculations of statistics and constraints are needed as a standard against which data drift and other data quality issues can be detected. The training dataset is usually a good baseline dataset. The training dataset schema and the inference dataset schema should match (the number and order of the features). From the training dataset, you can ask Amazon SageMaker to suggest a set of baseline constraints and generate descriptive statistics to explore the data. To create the baseline, you can follow the detailed steps in the walkthrough notebook. See the following code:

Inspecting baseline job results

When the baseline job is complete, we can inspect the results. Two files are generated:

- statistics.json – This file is expected to have columnar statistics for each feature in the dataset that is analyzed. For the schema of this file, see Schema for Statistics.

- constraints.json – This file is expected to have the constraints on the features observed. For the schema of this file, see Schema for Constraints.

Model Monitor computes per column/feature statistics. In the following screenshot, c0 and c1 in the name column refer to columns in the training dataset without the header row.

The constraints file is used to express the constraints that a dataset must satisfy. See the following screenshot.

Next we review the monitoring configuration in the constraints.json file:

- datatype_check_threshold – During the baseline step, the generated constraints suggest the inferred data type for each column. You can tune the monitoring_config.datatype_check_threshold parameter to adjust the threshold for when it’s flagged as a violation.

- domain_content_threshold – If there are more unknown values for a String field in the current dataset than in the baseline dataset, you can use this threshold to dictate if it needs to be flagged as a violation.

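- comparison_threshold – This value is the limit against which the distance between the baseline distribution and the current distribution is compared; a distance above it is flagged as drift.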

For more information about constraints, see Schema for Constraints.
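To make the three thresholds concrete, here is a small sketch that overrides them in a constraints document before it is used for monitoring. The sample document only mimics the layout of constraints.json; its feature entries and threshold values are placeholders, and `tune_thresholds` is a hypothetical helper, not part of the SageMaker SDK.

```python
import copy

# Illustrative constraints document; values are placeholders.
SAMPLE_CONSTRAINTS = {
    "version": 0,
    "features": [
        {"name": "c0", "inferred_type": "Integral", "completeness": 1.0},
        {"name": "c1", "inferred_type": "Fractional", "completeness": 1.0},
    ],
    "monitoring_config": {
        "evaluate_constraints": "Enabled",
        "emit_metrics": "Enabled",
        "datatype_check_threshold": 1.0,
        "domain_content_threshold": 1.0,
        "distribution_constraints": {
            "perform_comparison": "Enabled",
            "comparison_threshold": 0.1,
            "comparison_method": "Robust",
        },
    },
}


def tune_thresholds(constraints, datatype_check=None, domain_content=None,
                    comparison=None):
    """Return a copy of the constraints with the given thresholds overridden."""
    updated = copy.deepcopy(constraints)  # leave the caller's document untouched
    config = updated["monitoring_config"]
    if datatype_check is not None:
        config["datatype_check_threshold"] = datatype_check
    if domain_content is not None:
        config["domain_content_threshold"] = domain_content
    if comparison is not None:
        config["distribution_constraints"]["comparison_threshold"] = comparison
    return updated


tuned = tune_thresholds(SAMPLE_CONSTRAINTS, datatype_check=0.8, comparison=0.2)
```

The tuned document would then be written back as JSON and supplied as the constraints for the monitoring schedule.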

Creating a monitoring schedule

With a monitoring schedule, Amazon SageMaker can start processing jobs at a specified frequency to analyze the data collected during a given period. Amazon SageMaker compares the dataset for the current analysis with the baseline statistics and constraints provided and generates a violations report. To create an hourly monitoring schedule, enter the following code:
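A minimal sketch of this step, assuming `monitor` is a `DefaultModelMonitor` from the SageMaker Python SDK whose baseline job has already finished. The wrapper `create_hourly_schedule` and its argument names are hypothetical; the cron string is the hourly expression that the SDK's `CronExpressionGenerator.hourly()` helper is expected to return.

```python
# Runs at minute 0 of every hour.
HOURLY_CRON = "cron(0 * ? * * *)"


def create_hourly_schedule(monitor, endpoint_name, report_s3_uri, schedule_name):
    """Attach an hourly data quality monitoring schedule to an endpoint."""
    monitor.create_monitoring_schedule(
        monitor_schedule_name=schedule_name,
        endpoint_input=endpoint_name,                 # endpoint with data capture enabled
        output_s3_uri=report_s3_uri,                  # where reports are written
        statistics=monitor.baseline_statistics(),     # from the baseline job
        constraints=monitor.suggested_constraints(),  # from the baseline job
        schedule_cron_expression=HOURLY_CRON,
        enable_cloudwatch_metrics=True,
    )
    return schedule_name
```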

We then invoke the endpoint continuously to generate traffic for the model monitor to pick up. Because we set up an hourly schedule, we need to wait at least an hour for traffic to be detected.

Reviewing model monitoring

The violations file is generated as the output of a MonitoringExecution, which lists the results of evaluating the constraints (specified in the constraints.json file) against the current dataset that was analyzed. For more information about violation checks, see Schema for Violations. For our use case, the model monitor detects a data type mismatch violation in one of the requests sent to the endpoint. See the following screenshot.
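As a rough sketch of how such a report can be summarized, the snippet below groups violations by check type. The sample document is invented for illustration (the feature name and description are placeholders), and `summarize_violations` is a hypothetical helper.

```python
import json

# Illustrative violations report with a single data type violation.
SAMPLE_VIOLATIONS = json.dumps({
    "violations": [
        {
            "feature_name": "c5",
            "constraint_check_type": "data_type_check",
            "description": "Placeholder description of the data type mismatch.",
        }
    ]
})


def summarize_violations(document):
    """Map each constraint check type to the list of features that violated it."""
    summary = {}
    for violation in json.loads(document).get("violations", []):
        check = violation["constraint_check_type"]
        summary.setdefault(check, []).append(violation["feature_name"])
    return summary


print(summarize_violations(SAMPLE_VIOLATIONS))
```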

For more details, see the walkthrough notebook.

Evaluating the results

To determine the next steps for our experiment, we should consider the following two perspectives:

- Model Monitor violations: We only saw the datatype_check violation from the Model Monitor; we didn’t see a model drift violation. In our use case, Model Monitor uses the robust comparison method based on the two-sample K-S test to quantify the distance between the empirical distribution of our test dataset and the cumulative distribution of the baseline dataset. This distance didn’t exceed the value set for the comparison_threshold. The prediction results are aligned with the results in the training dataset.

- Probability distribution of prediction results: We used a test dataset of 114 requests. Out of this, we see that the model predicts 60% of the requests to be malignant (over 90% probability output in the prediction results), 30% benign (less than 10% probability output in the prediction results), and the remaining 10% of the requests are indeterminate. The following chart summarizes these findings.
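To give a feel for the drift check described in the first point, the following sketch computes a plain two-sample K-S statistic, the largest gap between two empirical cumulative distributions, and compares it with a threshold. This is a textbook version written for illustration; it is not Model Monitor's internal implementation of the robust method, and the function names and sample values are hypothetical.

```python
from bisect import bisect_right


def ks_distance(baseline, current):
    """Two-sample K-S statistic: maximum gap between the empirical CDFs."""
    a, b = sorted(baseline), sorted(current)
    if not a or not b:
        raise ValueError("both samples must be non-empty")
    max_gap = 0.0
    # The largest gap always occurs at one of the observed values.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)  # share of baseline values <= x
        cdf_b = bisect_right(b, x) / len(b)  # share of current values <= x
        max_gap = max(max_gap, abs(cdf_a - cdf_b))
    return max_gap


def drifted(baseline, current, comparison_threshold=0.1):
    """Flag drift when the K-S distance exceeds the threshold."""
    return ks_distance(baseline, current) > comparison_threshold


print(ks_distance([1, 2, 3, 4], [3, 4, 5, 6]))
```

A distance of 0 means the two samples have identical empirical distributions, and a distance of 1 means they do not overlap at all.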

As a next step, you need to send the prediction results that are distributed with output probabilities of over 10% and less than 90% (because the model can’t predict with sufficient confidence) to a domain expert who can look at the model results and identify if the tumor is benign or malignant. You use Amazon A2I to set up a human review workflow and define conditions for activating the review loop.

Starting the human review workflow

To configure your human review workflow, you complete the following high-level steps:

- Create the human task UI.

- Create the workflow definition.

- Set the trigger conditions to activate the human loop.

- Start your human loop.

- Check that the human loop tasks are complete.

Creating the human task UI

The following example code shows how to create a human task UI resource, giving a UI template in liquid HTML. This template is rendered to the human workers whenever a human loop is required. You can follow through the complete steps using the accompanying Jupyter notebook. After the template is defined, set up the UI task function and run it.
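A minimal sketch of this step, assuming `sagemaker_client` is a boto3 SageMaker client. The template is a deliberately small, hypothetical stand-in for the one in the notebook: the `task.input.prediction` variable and the `corrected_label` field name are assumptions that must match whatever input you later send to the human loop.

```python
# Hypothetical minimal worker template using Crowd HTML Elements.
TEMPLATE = """
<script src="https://assets.crowd.aws/crowd-html-elements.js"></script>
<crowd-form>
  <p>Model prediction: {{ task.input.prediction }}</p>
  <crowd-input name="corrected_label"
               label="Enter 1 for malignant or 0 for benign"
               required></crowd-input>
</crowd-form>
"""


def create_task_ui(sagemaker_client, ui_name, template=TEMPLATE):
    """Register the worker template and return the ARN of the task UI."""
    response = sagemaker_client.create_human_task_ui(
        HumanTaskUiName=ui_name,
        UiTemplate={"Content": template},
    )
    return response["HumanTaskUiArn"]
```

In a notebook, the client would typically come from `boto3.client("sagemaker")`, and the returned ARN is what the flow definition refers to.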

Creating the workflow definition

We create the flow definition to specify the following:

- The workforce that your tasks are sent to.

- The instructions that your workforce receives. This is specified using a worker task template.

- Where your output data is stored.

See the following code:
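One way to express the three items above with boto3 is sketched here, assuming `sagemaker_client` is a boto3 SageMaker client and that the work team and task UI already exist. The wrapper name, the task title and description, and the choice of one worker per task are illustrative assumptions.

```python
def create_review_flow(sagemaker_client, name, role_arn, workteam_arn,
                       task_ui_arn, output_s3_path):
    """Create an A2I flow definition and return its ARN."""
    response = sagemaker_client.create_flow_definition(
        FlowDefinitionName=name,
        RoleArn=role_arn,
        HumanLoopConfig={
            "WorkteamArn": workteam_arn,    # the workforce that receives tasks
            "HumanTaskUiArn": task_ui_arn,  # the worker task template
            "TaskCount": 1,                 # workers per task (illustrative)
            "TaskTitle": "Review breast cancer prediction",
            "TaskDescription": "Confirm or correct the model prediction",
        },
        OutputConfig={"S3OutputPath": output_s3_path},  # where results are stored
    )
    return response["FlowDefinitionArn"]
```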

Setting trigger conditions for human loop activation

We need to send the prediction results that are distributed with output probabilities of over 10% and under 90% (because the model can’t predict with sufficient confidence in this range). We use this as our activation condition, as shown in the following code:
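A minimal sketch of this activation condition, using the 10% and 90% bounds from the text. The function names and the sample probabilities are illustrative.

```python
LOWER_BOUND = 0.1  # at or below this, the model is confident the result is benign
UPPER_BOUND = 0.9  # at or above this, the model is confident the result is malignant


def needs_human_review(probability, lower=LOWER_BOUND, upper=UPPER_BOUND):
    """True when the prediction falls strictly between the two bounds."""
    return lower < probability < upper


def select_for_review(probabilities):
    """Return (index, probability) pairs that should go to a human reviewer."""
    return [(i, p) for i, p in enumerate(probabilities) if needs_human_review(p)]


print(select_for_review([0.97, 0.45, 0.03, 0.9, 0.12]))
# -> [(1, 0.45), (4, 0.12)]
```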

Starting a human loop

A human loop starts your human review workflow and sends data review tasks to human workers. See the following code:
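As a sketch, assuming `a2i_client` is a boto3 `sagemaker-a2i-runtime` client and the flow definition already exists, a loop can be started for each low-confidence prediction. The input keys `features` and `prediction` are assumptions and must match the variables used in your worker template; `start_review` is a hypothetical helper.

```python
import json
import uuid


def start_review(a2i_client, flow_definition_arn, features, probability):
    """Start one human loop for a single prediction and return the loop name."""
    # Loop names must be unique; a random suffix keeps them lowercase and short.
    loop_name = "review-" + uuid.uuid4().hex
    a2i_client.start_human_loop(
        HumanLoopName=loop_name,
        FlowDefinitionArn=flow_definition_arn,
        HumanLoopInput={
            # InputContent is a JSON string that the worker template reads.
            "InputContent": json.dumps(
                {"features": features, "prediction": probability}
            )
        },
    )
    return loop_name
```

Collecting the returned loop names in a list makes it straightforward to check their status later.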

The workers in this use case are domain experts that can validate the request features and determine if the result is malignant or benign. The task requires reviewing the model predictions, agreeing or disagreeing, and updating the prediction as 1 for malignant and 0 for benign. The following screenshot shows a sample of tasks received.

The following screenshot shows updated predictions.

For more information about task UI design for tabular datasets, see Using Amazon SageMaker with Amazon Augmented AI for human review of Tabular data and ML predictions.

Checking the status of task completion and human loop

To check the status of the task and the human loop, enter the following code:
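A minimal sketch of the status check, assuming `a2i_client` is a boto3 `sagemaker-a2i-runtime` client and `loop_names` holds the names of the loops started earlier. The helper names are hypothetical.

```python
def human_loop_statuses(a2i_client, loop_names):
    """Return a mapping of human loop name to its current status."""
    statuses = {}
    for name in loop_names:
        response = a2i_client.describe_human_loop(HumanLoopName=name)
        statuses[name] = response["HumanLoopStatus"]
    return statuses


def all_completed(statuses):
    """True when every human loop has reached the Completed status."""
    return all(status == "Completed" for status in statuses.values())
```

For completed loops, the same `describe_human_loop` response also carries the Amazon S3 location of the review output, which is where the corrected labels can be read from.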

When the human loop tasks are complete, we inspect the results of the review and the corrections made to prediction results.

You can use the human-labeled output to augment the training dataset for retraining. Retraining on recently reviewed examples helps the model keep up with changes in the incoming data, which can improve model accuracy. For more information about using Amazon A2I outputs for model retraining, see Object detection and model retraining with Amazon SageMaker and Amazon Augmented AI.

Cleaning up

To avoid incurring unnecessary charges, delete the resources used in this walkthrough when not in use, including the following:

Conclusion

This post demonstrated how you can use Amazon SageMaker Model Monitor and Amazon A2I to set up a monitoring schedule for your Amazon SageMaker model endpoints; specify baselines that include constraint thresholds; observe inference traffic; derive insights such as model drift, completeness, and data type violations; and send the low-confidence predictions to a human workflow with labelers to review and update the results. For video presentations, sample Jupyter notebooks, and more information about use cases like document processing, content moderation, sentiment analysis, object detection, text translation, and more, see Amazon A2I Resources.

References

[1] Dua, D. and Graff, C. (2019). UCI Machine Learning Repository [http://archive.ics.uci.edu/ml]. Irvine, CA: University of California, School of Information and Computer Science.

About the Authors

Prem Ranga is an Enterprise Solutions Architect at AWS based out of Houston, Texas. He is part of the Machine Learning Technical Field Community and loves working with customers on their ML and AI journey. Prem is passionate about robotics, is an Autonomous Vehicles researcher, and also built the Alexa-controlled Beer Pours in Houston and other locations.

Jasper Huang is a Technical Writer Intern at AWS and a student at the University of Pennsylvania pursuing a BS and MS in computer science. His interests include cloud computing, machine learning, and how these technologies can be leveraged to solve interesting and complex problems. Outside of work, you can find Jasper playing tennis, hiking, or reading about emerging trends.

Talia Chopra is a Technical Writer in AWS specializing in machine learning and artificial intelligence. She works with multiple teams in AWS to create technical documentation and tutorials for customers using Amazon SageMaker, MxNet, and AutoGluon. In her free time, she enjoys meditating, studying machine learning, and taking walks in nature.
