If you’re like me, you may have noticed that AI has become a part of daily life. I wake up each morning and ask my smart assistant about the weather. I recently applied for a new credit card and the credit limit was likely determined by a machine learning model. And while typing the previous sentence, I got a word choice suggestion that “probably” might flow better than “likely,” a suggestion powered by AI.

As a member of Google’s Responsible Innovation team, I think a lot about how AI works and how to develop it responsibly. Recently, I spoke with Patrick Gage Kelley, Head of Research Strategy on Google’s Trust & Safety team, to learn more about developing products that help people recognize and understand AI in their daily lives.

How do you help people navigate a world with so much AI?

My goal is to ensure that people, at a basic level, know how AI works and how it impacts their lives. AI systems can be really complicated, but the goal of explaining AI isn’t to get everyone to become programmers and understand all of the technical details — it’s to make sure people understand the parts that matter to them.

When AI makes a decision that affects people (whether it’s recommending a video or determining whether someone qualifies for a loan), we want to explain how that decision was made. And we don’t want to provide just a complicated technical explanation, but rather information that is meaningful and helpful, and that equips people to act if needed.

We also want to find the best times to explain AI. Our goal is to help people develop AI literacy early, including in primary and secondary education. And when people use products that rely on AI (everything from online services to medical devices), we want to create many opportunities for people to learn about the role AI plays, as well as its benefits and limitations. For example, if people are told early on what kinds of mistakes AI-powered products are likely to make, then they are better prepared to understand and remedy situations that might arise.

Do I need to be a mathematician or programmer to have a meaningful understanding of AI?

No! A good metaphor here is financial literacy. While we may not need to know every detail of what goes into interest rate hikes or the intricacies of financial markets, it’s important to know how they impact us, whether we’re paying off credit cards, buying a home, or repaying student loans. In the same way, AI explainability isn’t about understanding every technical aspect of a machine learning algorithm; it’s about knowing how to interact with it and how it impacts our daily lives.

How should AI practitioners — developers, designers, researchers, students, and others — think about AI explainability?

Lots of practitioners are doing important work on explainability. Some focus on interpretability, making it easier to identify specific factors that influence a decision. Others focus on providing “in-the-moment explanations” right when AI makes a decision. These can be helpful, especially when carefully designed. However, AI systems are often so complex that we can’t rely on in-the-moment explanations entirely. It’s just too much information to pack into a single moment. Instead, AI education and literacy should be incorporated into the entire user journey and built continuously throughout a person’s life.

More generally, AI practitioners should think about AI explainability as fundamental to the design and development of the entire product experience. At Google, we use our AI Principles to guide responsible technology development. In accordance with AI Principle #4: “Be accountable to people,” we encourage AI practitioners to think about all the moments and ways they can help people understand how AI operates and makes decisions.

How are you and your collaborators working to improve explanations of AI?

We develop resources that help AI practitioners learn creative ways to incorporate AI explainability in product design. For example, in the PAIR Guidebook we launched a series of ethical case studies to help AI practitioners think through tricky issues and hone their skills for explaining AI. We also do fundamental research like this paper to learn more about how people perceive AI as a decision-maker, and what values they would like AI-powered products to embody.

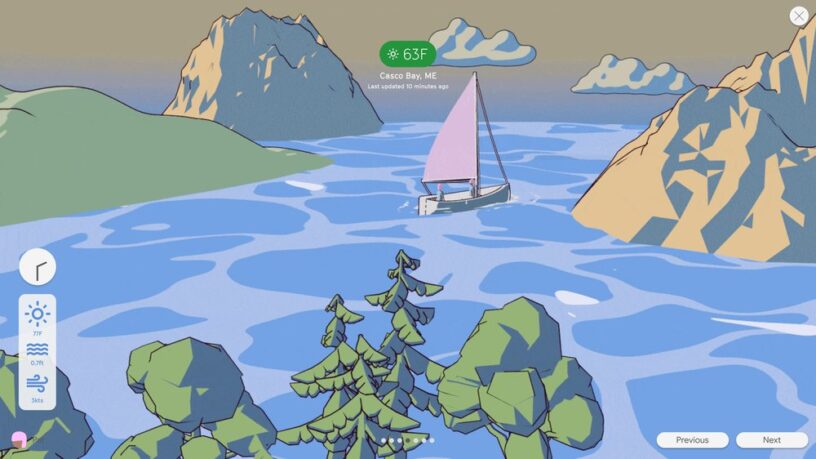

We’ve learned that many AI practitioners want concrete examples of good explanations of AI that they can build on, so we’re currently developing a story-driven visual design toolkit for explanations of a fictional AI app. The toolkit will be publicly available, so teams in startups and tech companies everywhere can prioritize explainability in their work.

The visual design toolkit provides story-driven examples of good explanations of AI.

I want to learn more about AI explainability. Where should I start?

This February, we released an Applied Digital Skills lesson, “Discover AI in Daily Life.” It’s a great place to start for anyone who wants to learn more about how we interact with AI every day.

We also hope to speak about AI explainability at the upcoming South by Southwest Conference. Our proposed session would dive deeper into these topics, including our visual design toolkit for product designers. If you’re interested in learning more about AI explainability and our work, you can vote for our proposal through the SXSW PanelPicker® here.