This guest post was authored by Kazuma Ohara, Director of RENGA Inc., and edited by Yumiko Kanasugi, Solutions Architect at AWS Japan.

RENGA Inc. operates Mansion Note, one of Japan’s most popular condominium review and rating websites, with over a million unique visitors per month. On Mansion Note, people can check reviews and rankings of condominiums and apartments all over Japan. Contributors with different relationships to a property, such as current residents, former residents, neighbors, experts, real estate agents, and property owners, state that relationship first and then post and share their candid opinions and reviews. This “wisdom of crowds” is meant to help potential buyers and tenants better picture their new home before moving in, and to help avoid regrets such as, “This is different from what I expected,” or, “I should have chosen a different condo.”

The company has a total of six engineers, including myself. Code reviews have been an essential part of our development process, because RENGA takes code quality very seriously. However, the review workload used to grow in proportion to the amount of development, which put an increasing burden on reviewers. Also, no matter how many times we reviewed code, some bugs went unnoticed, so we needed a way to review code more exhaustively.

We saw the announcement of Amazon CodeGuru at re:Invent 2019. The moment we learned that CodeGuru is a machine learning (ML)-based code review service, we knew it was exactly the tool we were looking for. At the time of the announcement, we were making significant improvements to our source code, so we thought that CodeGuru Reviewer might help us with that. We decided to adopt the tool, and it pointed out issues that neither our team members nor other static analysis tools had ever detected. In this post, I talk about why RENGA decided to adopt CodeGuru, as well as its adoption process.

Maintaining code quality

RENGA was founded in 2012; 2020 marks its 8th anniversary. Although our product is maturing, we still invest a lot of resources in development so we can extend features quickly. We not only accelerate the development cycle, but also give the same level of priority to maintaining code quality. When we extend features, poor-quality code adds complexity to the system and can become technical debt. On the other hand, as long as code quality stays consistent, the growth of the system doesn’t prevent developers from extending features, because the code itself remains simple. Keeping the balance between agility and quality is important, especially for startups that continuously release new features.

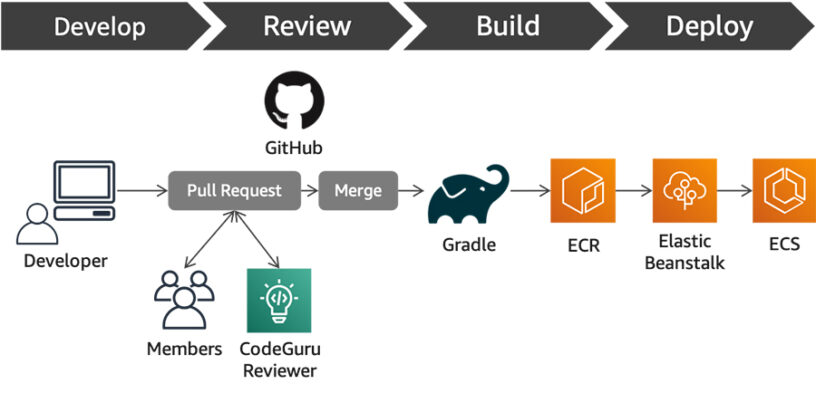

RENGA has a two-step code review process. When a developer commits a fix to our remote repository on GitHub and makes a pull request, two senior members review it first. Then I do the final check and merge it into the primary branch (unless I find an issue). We also check the minimum coding rules using Checkstyle during the build phase.

In the past, we struggled with both the cost and the quality of code reviews. As the amount of code at RENGA grew, so did the burden on reviewers. We spend about 5% of our development time on code reviews, and reviewers spend an additional 1 hour a day on average on them. Code reviews can, however, become a bottleneck when we want to release new features quickly and deliver their value to our users promptly. Also, the more code we need to review, the less accurately we identify issues, and some issues may be overlooked entirely. One solution is to add more reviewers, but that isn’t easy because code reviews require not only extensive business and technical knowledge, but also an understanding of the top modules. So we needed an automated tool that could take some of the workload off the reviewers.

Adopting CodeGuru Reviewer

CodeGuru Reviewer is an automated tool that you can seamlessly integrate into your development pipeline, so we found it very easy to adopt. We had high expectations that the tool would not only solve our cost issues, but also give us insights from a different perspective than human reviewers, because it is based on ML. The tool was still in the preview stage, but because a free tier was offered, we decided to try it out.

The following architecture diagram illustrates RENGA’s development pipeline.

Setting up CodeGuru Reviewer is very easy. All you need to do is select a repository on GitHub and associate it with the tool. AWS recommends that you create a new GitHub user for CodeGuru Reviewer before associating a GitHub repository with the tool. CodeGuru Reviewer is enabled after the association is complete.

The following screenshot shows the Associate repository page on the CodeGuru console.

CodeGuru Reviewer is triggered by a pull request. The tool provides recommendations by adding comments to the pull request, usually within 15 minutes after the request is made.

The following text is a recommendation that CodeGuru Reviewer generated:

The part of the code the recommendation pointed out was actually not a bottleneck, but it did help us improve performance. Learning this method has helped our developers code better with more confidence.

The following text is another example of a recommendation from CodeGuru Reviewer:

Although there was no actual resource leakage, we modified the part that was pointed out to clarify that no leakage will occur, which improved the code readability.
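As a hypothetical illustration of this kind of change (not RENGA's actual code, and sketched in Python for brevity), the two functions below behave identically and neither leaks a file handle. The first closes the handle in a separate helper, so a reviewer has to read the helper to confirm that. The second keeps opening and closing in one scope. All function names here are invented for the example.

```python
import os
import tempfile


def _read_and_close(handle):
    """Read the first line, then close the handle the caller opened."""
    try:
        return handle.readline().rstrip("\n")
    finally:
        handle.close()  # correct, but far from the open() call


def first_line_before(path):
    # No leak in practice: the helper always closes the handle.
    # A reviewer still has to open the helper to be sure of that.
    return _read_and_close(open(path, encoding="utf-8"))


def first_line_after(path):
    # The with-block closes the file on every exit path, including
    # exceptions, so ownership is visible in a single scope.
    with open(path, encoding="utf-8") as handle:
        return handle.readline().rstrip("\n")


def demo():
    """Write a throwaway file and read it back with both variants."""
    fd, path = tempfile.mkstemp(text=True)
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as out:
            out.write("first\nsecond\n")
        return first_line_before(path), first_line_after(path)
    finally:
        os.remove(path)
```

In Java, the equivalent of the second form would be a try-with-resources statement.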

After adopting CodeGuru Reviewer, we feel that it generates fewer recommendations than other existing static analysis tools, but that the recommendations it does provide are highly accurate, with fewer false positives. Too many recommendations require extra time for triage, so accurate recommendations are a big bonus for busy developers.

Summary

Although the code review process is important, it shouldn’t increase the workload for reviewers and become a bottleneck in development. By adopting CodeGuru Reviewer, we successfully automated part of our code reviews and reduced reviewers’ workloads. Furthermore, learning coding best practices that we weren’t aware of has helped us develop with more confidence. Going forward, we plan on measuring metrics such as cyclomatic complexity so we can provide higher-quality services to our customers promptly. We also expect that CodeGuru Reviewer will further expand its recommendation items.
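For readers unfamiliar with cyclomatic complexity, the following is a minimal sketch of how the metric can be approximated: start at 1 and add one for each decision point in the code. It uses Python's standard `ast` module and counts only a small set of branching constructs, so it is a rough approximation rather than a full McCabe implementation, and it is not how RENGA or CodeGuru measures complexity. The function name and the sample snippet are assumptions made for this example.

```python
import ast

# Node types treated as decision points in this simplified count.
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source):
    """Approximate complexity of a source string: 1 + decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # Each extra operand of `and` / `or` adds one more path.
            complexity += len(node.values) - 1
    return complexity


SAMPLE = '''
def grade(score, bonus):
    if score > 90 and bonus:
        return "A"
    for limit, letter in ((80, "B"), (70, "C")):
        if score >= limit:
            return letter
    return "D"
'''

if __name__ == "__main__":
    # 1 (base) + if + `and` + for + inner if
    print(cyclomatic_complexity(SAMPLE))
```

The sketch scores a whole source string at once. A practical tool would report the number per function so that the most complex ones can be flagged during review.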

About the Authors

Kazuma Ohara is the Director of RENGA Inc., an internet services company headquartered in Japan.

Yumiko Kanasugi is a Solutions Architect with Amazon Web Services Japan, supporting digital native business customers to utilize AWS.
