The new Amazon SageMaker Studio Image Build convenience package lets data scientists and developers build custom container images directly from their Studio notebooks via a new CLI. The CLI eliminates the need to manually set up and connect to Docker build environments for building container images in Amazon SageMaker Studio.

Amazon SageMaker Studio provides a fully integrated development environment for machine learning (ML). Amazon SageMaker offers a variety of built-in algorithms, built-in frameworks, and the flexibility to use any algorithm or framework by bringing your own container images. The Amazon SageMaker Studio Image Build CLI lets you build Amazon SageMaker-compatible Docker images directly from your Amazon SageMaker Studio environments. Prior to this feature, you could only build Docker images from Amazon SageMaker Studio notebooks by setting up and connecting to secondary Docker build environments.

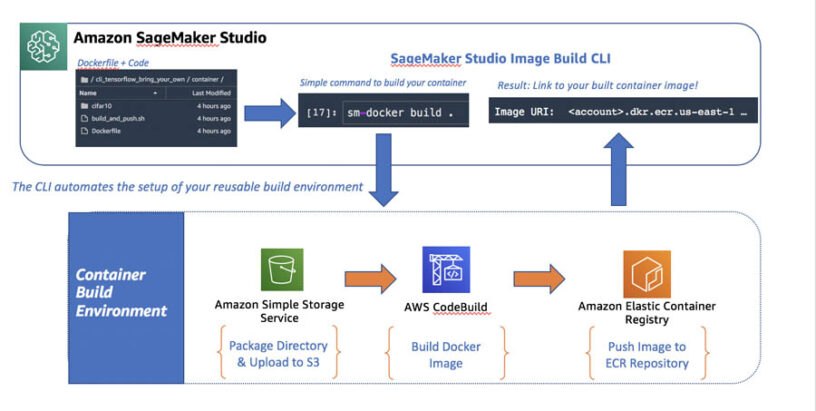

You can now create container images directly from Amazon SageMaker Studio by using the CLI. The CLI removes the need to set up a secondary build environment, so you can spend your time on the ML problem you’re trying to solve rather than on creating workflows for Docker builds. The CLI automatically sets up a reusable build environment that you interact with via high-level commands. You tell the CLI to build your image, without having to worry about the underlying workflow it orchestrates, and the output is a link to your Amazon Elastic Container Registry (Amazon ECR) image location. The following diagram illustrates this architecture.

The CLI uses the following underlying AWS services:

- Amazon S3 – The new CLI packages your Dockerfile and container code, along with a buildspec.yml file used by AWS CodeBuild, into a .zip file stored in Amazon Simple Storage Service (Amazon S3). By default, this file is automatically cleaned up following the build to avoid unnecessary storage charges.

- AWS CodeBuild – CodeBuild is a fully managed build environment that allows you to build Docker images using a transient build environment. CodeBuild is dependent on a buildspec.yml file that contains build commands and settings that it uses to run your build. The new CLI takes care of automatically generating this file. The CLI automatically kicks off the container build using the packaged files from Amazon S3. CodeBuild pricing is pay-as-you-go and based on build minutes and the build compute used. By default, the CLI uses general1.small compute.

- Amazon ECR – Built Docker images are tagged and pushed to Amazon ECR. Amazon SageMaker expects training and inference images to be stored in Amazon ECR, so after the image is successfully pushed to the repository, you’re ready to go. The CLI returns a link to the URI of the image that you can include in your Amazon SageMaker training and hosting calls.

Now that we’ve outlined the underlying AWS services and benefits of using the new Amazon SageMaker Studio Image Build convenience package to abstract your container build environments, let’s explore how to get started using the CLI!

Prerequisites

To use the CLI, we need to ensure the Amazon SageMaker execution role used by your Studio notebook environment (or another AWS Identity and Access Management (IAM) role, if you prefer) has the required permissions to interact with the resources used by the CLI, including access to CodeBuild and Amazon ECR.

Your role should have a trust policy with CodeBuild. See the following code:
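
For reference, a trust relationship with CodeBuild generally takes the shape below. This is a minimal sketch written as a Python dictionary and serialized to JSON so that you can paste the output into the IAM console; the variable names are illustrative, and you should check the result against your own role before relying on it.

```python
import json

# Trust policy that lets the CodeBuild service assume the role.
# codebuild.amazonaws.com is the service principal for AWS CodeBuild.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": ["codebuild.amazonaws.com"]},
            "Action": "sts:AssumeRole",
        }
    ],
}

# Serialize to JSON text suitable for the role's trust relationship editor.
trust_policy_json = json.dumps(trust_policy, indent=2)
print(trust_policy_json)
```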

You also need to make sure the appropriate permissions are included in your role to run the build in CodeBuild, create a repository in Amazon ECR, and push images to that repository. The following code is an example policy that you should modify as necessary to meet your needs and security requirements:
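
As a starting point, the sketch below assembles such a policy as a Python dictionary. The action names are real IAM actions for CodeBuild, Amazon CloudWatch Logs, Amazon ECR, Amazon S3, and IAM, but the resource patterns (a sagemaker-studio prefix for build projects, log groups, and repositories, and a sagemaker- prefix for buckets) and the helper name are assumptions made for illustration. Tighten them to match your account and security requirements.

```python
import json

def build_permissions_policy(prefix="sagemaker-studio"):
    """Return an example policy document; resource patterns are assumptions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Create, run, inspect, and clean up the temporary build project.
                "Effect": "Allow",
                "Action": [
                    "codebuild:CreateProject",
                    "codebuild:StartBuild",
                    "codebuild:BatchGetBuilds",
                    "codebuild:DeleteProject",
                ],
                "Resource": f"arn:aws:codebuild:*:*:project/{prefix}*",
            },
            {   # Write and read the build logs shown in the notebook.
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                    "logs:GetLogEvents",
                ],
                "Resource": f"arn:aws:logs:*:*:log-group:/aws/codebuild/{prefix}*",
            },
            {   # Create the repository and push image layers to it.
                "Effect": "Allow",
                "Action": [
                    "ecr:CreateRepository",
                    "ecr:DescribeRepositories",
                    "ecr:BatchCheckLayerAvailability",
                    "ecr:InitiateLayerUpload",
                    "ecr:UploadLayerPart",
                    "ecr:CompleteLayerUpload",
                    "ecr:PutImage",
                ],
                "Resource": f"arn:aws:ecr:*:*:repository/{prefix}*",
            },
            {   # The ECR login token is not scoped to a repository.
                "Effect": "Allow",
                "Action": ["ecr:GetAuthorizationToken"],
                "Resource": "*",
            },
            {   # Upload, read, and clean up the packaged .zip file.
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
                "Resource": "arn:aws:s3:::sagemaker-*/*",
            },
            {   # Hand the role to CodeBuild, and only to CodeBuild.
                "Effect": "Allow",
                "Action": ["iam:PassRole"],
                "Resource": "arn:aws:iam::*:role/*",
                "Condition": {
                    "StringEquals": {"iam:PassedToService": "codebuild.amazonaws.com"}
                },
            },
        ],
    }

print(json.dumps(build_permissions_policy(), indent=2))
```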

You must also install the package in your Studio notebook environment to be able to use the convenience package. To install, use pip install within your notebook environment:

!pip install sagemaker-studio-image-build

Using the CLI

After completing these prerequisites, you’re ready to start taking advantage of the new CLI to easily build your custom bring-your-own Docker images from Amazon SageMaker Studio without worrying about the underlying setup and configuration of build services.

To use the CLI, you can navigate to the directory containing your Dockerfile and enter the following code:

sm-docker build .

Alternatively, you can explicitly identify the path to your Dockerfile using the --file argument:

sm-docker build . --file /path/to/Dockerfile

It’s that simple! The command automatically logs build output to your notebook and returns the image URI of your Docker image.
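
An Amazon ECR image URI follows the standard format <account-id>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>. The sketch below splits such a URI into its parts, which can be handy before passing it to training or hosting calls. The helper name is hypothetical, and the account ID, Region, and repository name in the example are made up.

```python
import re
from typing import NamedTuple

class EcrImage(NamedTuple):
    account_id: str
    region: str
    repository: str
    tag: str

# Standard commercial-Region ECR host; other partitions
# (for example amazonaws.com.cn) are not handled in this sketch.
_ECR_URI = re.compile(
    r"^(?P<account_id>\d{12})\.dkr\.ecr\.(?P<region>[a-z0-9-]+)\.amazonaws\.com/"
    r"(?P<repository>[^:@]+)(?::(?P<tag>[^:@]+))?$"
)

def parse_ecr_image_uri(uri: str) -> EcrImage:
    """Split an ECR image URI into account, Region, repository, and tag."""
    match = _ECR_URI.match(uri)
    if match is None:
        raise ValueError(f"not an ECR image URI: {uri!r}")
    # Docker treats a missing tag as "latest".
    return EcrImage(
        match["account_id"],
        match["region"],
        match["repository"],
        match["tag"] or "latest",
    )

# Made-up example URI for illustration only.
example = "123456789012.dkr.ecr.us-east-1.amazonaws.com/sagemaker-studio-d-example:latest"
print(parse_ecr_image_uri(example))
```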

The CLI takes care of the rest. Let’s take a deeper look at what the CLI is actually doing. The following diagram illustrates this process.

The workflow contains the following steps:

- The CLI automatically zips the directory containing your Dockerfile, generates the buildspec for AWS CodeBuild, and adds the buildspec to the final .zip package. By default, the final .zip package is put in the Amazon SageMaker default session S3 bucket. Alternatively, you can specify a custom bucket using the --bucket argument.

- After packaging your files for build, the CLI creates an ECR repository if one doesn’t exist. By default, the ECR repository created has the naming convention of sagemaker-studio-<studioID>.

- The final step performed by the CLI is to create a temporary build project in CodeBuild and start the build, which builds your container image, tags it, and pushes it to the ECR repository.
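
To make the packaging step more concrete, here is a rough sketch of zipping a build directory together with a buildspec.yml, using only the Python standard library. It is not the CLI's actual implementation: the function name, the archive layout, and the placeholder buildspec contents are all assumptions, and the real buildspec generated by the CLI will differ.

```python
import tempfile
import zipfile
from pathlib import Path
from typing import List

# Placeholder only: NOT the buildspec the CLI generates.
PLACEHOLDER_BUILDSPEC = """\
version: 0.2
phases:
  build:
    commands:
      - echo "docker build, tag, and push would run here"
"""

def package_build_context(context_dir: Path, zip_path: Path) -> List[str]:
    """Zip every file under context_dir plus a buildspec.yml.

    zip_path should live outside context_dir so the archive
    does not try to include itself. Returns the archive member names.
    """
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(context_dir.rglob("*")):
            if path.is_file():
                # Store paths relative to the build directory.
                archive.write(path, path.relative_to(context_dir).as_posix())
        archive.writestr("buildspec.yml", PLACEHOLDER_BUILDSPEC)
        return archive.namelist()

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        context = Path(tmp) / "context"
        context.mkdir()
        (context / "Dockerfile").write_text("FROM python:3.8\n")
        print(package_build_context(context, Path(tmp) / "package.zip"))
```

In the real workflow, the resulting archive is what gets uploaded to Amazon S3 for CodeBuild to consume.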

The great part about the CLI is you no longer have to set any of this up or worry about the underlying activities to easily build your container images from Amazon SageMaker Studio.

You can also optionally customize your build environment by using supported arguments such as the following code:
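
As an illustration of combining arguments, the sketch below assembles an sm-docker build command line from optional settings. Only --file and --bucket appear in this post; --repository, --role, and --compute-type are assumed here from the package's documentation, so confirm them against the GitHub repo before use. The helper name and the example values are hypothetical.

```python
import shlex
from typing import List, Optional

def sm_docker_build_command(
    context: str = ".",
    dockerfile: Optional[str] = None,
    bucket: Optional[str] = None,
    repository: Optional[str] = None,    # assumed flag: --repository
    role: Optional[str] = None,          # assumed flag: --role
    compute_type: Optional[str] = None,  # assumed flag: --compute-type
) -> List[str]:
    """Build the argument list for an sm-docker build invocation."""
    command = ["sm-docker", "build", context]
    options = [
        ("--file", dockerfile),
        ("--bucket", bucket),
        ("--repository", repository),
        ("--role", role),
        ("--compute-type", compute_type),
    ]
    # Only include the flags that were actually provided.
    for flag, value in options:
        if value is not None:
            command += [flag, value]
    return command

# Print a shell-ready command string (shlex.join needs Python 3.8+).
print(shlex.join(sm_docker_build_command(
    dockerfile="/path/to/Dockerfile",
    bucket="my-build-bucket",
)))
```

The printed string can be pasted into a Studio terminal, or prefixed with ! in a notebook cell.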

Changes from Amazon SageMaker classic notebooks

To help illustrate the changes required when moving bring-your-own Amazon SageMaker example notebooks, or your own custom-developed notebooks, from classic notebook instances to Amazon SageMaker Studio, we’ve provided two example notebooks that use the Amazon SageMaker Studio Image Build CLI:

- The TensorFlow Bring Your Own example notebook is based on the existing TensorFlow Bring Your Own example and adapted to use the new CLI with Amazon SageMaker Studio.

- The BYO XGBoost notebook demonstrates a typical data science user flow of data exploration and feature engineering, model training using a custom XGBoost container built using the CLI, and using Amazon SageMaker batch transform for offline or batch inference.

The key change required to adapt your existing notebooks to use the new CLI in Amazon SageMaker Studio is removing the build_and_push.sh script from your directory structure. In classic notebook instances, that script builds your Docker image and pushes it to Amazon ECR; in Studio, the new CLI replaces it. The following image compares the directory structures.

Summary

This post discussed how you can simplify the build of your Docker images from Amazon SageMaker Studio by using the new Amazon SageMaker Studio Image Build CLI convenience package. It abstracts the setup of your Docker build environments by automatically setting up the underlying services and workflow necessary for building Docker images. This package allows you to interact with an abstracted build environment through simple CLI commands in Amazon SageMaker Studio so you can focus on building models! For more information, see the GitHub repo.

About the Authors

Shelbee Eigenbrode is a solutions architect at Amazon Web Services (AWS). Her current areas of depth include DevOps combined with machine learning and artificial intelligence. She’s been in technology for 22 years, spanning multiple roles and technologies. In her spare time she enjoys reading and spending time with her family, friends, and her fur family (aka dogs).

Jaipreet Singh is a Senior Software Engineer on the Amazon SageMaker Studio team. He has been working on Amazon SageMaker since its inception in 2017 and has contributed to various Project Jupyter open-source projects. In his spare time, he enjoys hiking and skiing in the PNW.

Sam Liu is a product manager at Amazon Web Services (AWS). His current focus is the infrastructure and tooling of machine learning and artificial intelligence. Beyond that, he has 10 years of experience building machine learning applications in various industries. In his spare time, he enjoys making short videos for technical education or animal protection.

Stefan Natu is a Sr. Machine Learning Specialist at Amazon Web Services. He is focused on helping financial services customers build and operationalize end-to-end machine learning solutions on AWS. His academic background is in theoretical physics, and in the past, he worked on a number of data science problems in retail and energy verticals. In his spare time, he enjoys reading machine learning blogs, traveling, playing the guitar, and exploring the food scene in New York City.
